Sometimes you get an idea. And it's a fun idea, and it brings together a lot of cool weird things, and you think, "maybe this could actually work out." This post is the story of one such idea. It's also the story of a paper, "Bounds on extra dimensions from micro black holes in the context of the metastable Higgs vacuum," by me and Professor Robert McNees, just posted on the arxiv.

Note added 14 February 2019: See note at bottom of post for important science correction!

A Bubble of Quantum Death

There are several ways the Universe could end, some dramatic, some pathetic, some just outright weird. My personal favorite is vacuum decay, in which the Universe succumbs to an expanding bubble of unimaginable destruction that arises from what could be described as a manufacturer's flaw in the fabric of the cosmos.

I first encountered the idea of vacuum decay as a grad student learning about some exotic ideas about dark matter, and in my readings I veered into the territory of some of the classic works examining whether or not our Universe is really as stable as we think. The idea is that it's possible that the fundamental nature of the Universe, what we call the "vacuum state," might not be unique. The Universe could, in principle, be in other vacuum states with different constants of nature and totally unrecognizable laws of physics.

As an abstract concept, this might not be a big deal. Maybe there are other ways the Universe could have been set up -- so what? But here’s the problem: The Universe could transition from one vacuum state to another. And that would kill us all.

The picture looks like this: Maybe there are two possible vacuum states, one at a somewhat higher energy than the other. The higher energy one is called the "false vacuum," and the lower one the "true vacuum." If you're in a true vacuum, you're fine, and the Universe is stable. It's like living on the bottom of a valley -- there's nowhere to fall into. But if you're in a false vacuum, it's like being stuck in a little divot on the side of a cliff with the valley far below. A little bump could send you into the abyss.

There's a connection here to the Higgs field, a sort of energy field that pervades the Universe and is responsible for particles having mass. When I talk about "the vacuum state of the Universe," I’m referring to the Higgs vacuum -- it has to do with properties of the Higgs field. A few years ago, scientists at the Large Hadron Collider completed a decades-long effort to detect the Higgs boson, a particle associated with the Higgs field that finally filled in the missing piece of the Standard Model of Particle Physics. Unfortunately, that discovery came with some ominous news about the state of the Higgs vacuum and the stability of the cosmos.

Sometime in the 60s or 70s, physicists started to explore the possibility that we live in a false vacuum -- a "metastable" universe that is precariously teetering on the edge of disaster. If an extremely high energy event happened somewhere in the Universe, it could kick the Higgs field over the metaphorical cliff and send that little part of the Universe into the true vacuum. Because the true vacuum is more stable than the false one, the transition would spread, creating a bubble of true vacuum within our space that would expand at the speed of light in all directions. This is called vacuum decay, and it's suuuuper fatal.

A diagram from Coleman & De Luccia 1980 showing a false vacuum (right-hand-side valley) and a true vacuum (left-hand-side valley). You can imagine our Universe as a ball sitting in the bottom of the right-hand-side valley, which could either be knocked over the hill into the other one or tunnel through the barrier between them.

If you're standing next to the vacuum decay event, you have no idea it's happened, and you definitely don't see it coming. When something travels at the speed of light, it can't send a signal ahead -- the first clue that it's occurred is that it's on top of you. And it's fatal in two ways. First, the bubble wall hits, carrying with it extreme energies that incinerate everything in its path. Second, once you're inside the bubble, you're in a kind of space that has different laws of physics. Your atoms don't hold together anymore, and you disintegrate immediately. Of course, you don't notice, because the bubble expands at the speed of light, and your nerve impulses travel far more slowly. So, that's a mercy.

In any case, vacuum decay is bad. And unfortunately, measurements of the properties of the Higgs boson suggest that our Universe is, in fact, metastable, and thus vulnerable to vacuum decay events. The bright side is that it would take an unimaginably huge amount of energy to kick us over the edge. We couldn’t do it ourselves, and we don't know of anything in the Universe that could. And, in fact, the current favored hypothesis for the early universe suggests that if vacuum decay could be triggered, it would have happened in the very early universe, so perhaps it can't happen at all.

|

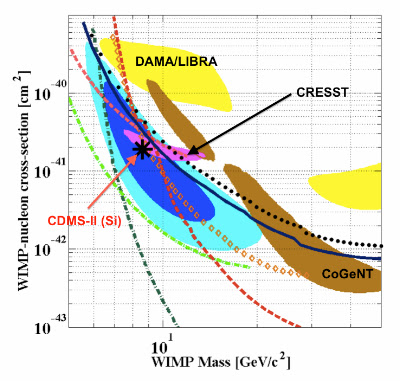

| Figure from Degrassi et al. 2012, showing the possible stability states of the Higgs vacuum based on measurements of the Higgs boson mass and top quark mass. The little dot in the rectangle is where it appears our Universe sits, in the meta-stability region. |

Still, at the moment, we don't really know. Even worse, vacuum decay can happen in other ways. One particularly unsettling possibility is quantum tunnelling. Physicists discovered decades ago that particles can sometimes "tunnel" through barriers, a phenomenon that is fundamental to quantum theory and is put to practical use in electronics (like flash memory cards) every day. In the same way, it's possible, if extraordinarily improbable, that the Higgs field could tunnel right through the barrier keeping it from the true vacuum -- and that this could happen literally at any moment. But the probability is so small that, across the whole observable universe, it is unlikely to happen until a timespan much longer than the current age of the Universe (13.8 billion years) has passed. So, we're probably fine. Probably.

Death by Black Hole?

But there's another possibility. A couple years ago, a group of physicists, Philipp Burda, Ruth Gregory, and Ian Moss, calculated that black holes could also trigger vacuum decay (you can see the paper here). According to theoretical work pioneered by Stephen Hawking, if you leave a black hole alone long enough, it'll "evaporate" -- slowly disappearing by letting out a little bit of radiation over time. As it gets smaller and smaller, it evaporates faster and faster, eventually disappearing completely -- possibly in a dramatic final explosion.

The black holes we know about in the Universe, which range from a few to millions of times as massive as our Sun, would take many, many orders of magnitude longer than the age of the Universe to evaporate -- something north of 10 to the power of 64 years. But a tiny black hole could evaporate much more quickly. What the recent work showed was that when black holes are small enough that they're just about to finish evaporating completely, they can trigger vacuum decay. This is all theoretical of course, but if it's true, it tells us that primordial black holes -- tiny black holes that may have formed in the early universe -- would have to have been above a certain mass, or they’d have already destroyed us.
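To put rough numbers on those evaporation timescales, here is a minimal sketch using the standard textbook estimate for the lifetime of an ordinary (four-dimensional, non-rotating) black hole, t ≈ 5120 π G² M³ / (ħ c⁴). This is only an illustration: the estimate counts photon emission alone, it ignores extra dimensions entirely, and it is not the calculation from the paper. The function name and the example masses are my own choices.

```python
import math

# Physical constants in SI units (rounded values).
G = 6.674e-11             # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.0546e-34         # reduced Planck constant, J s
C = 2.998e8               # speed of light, m/s
SOLAR_MASS = 1.989e30     # kg
SECONDS_PER_YEAR = 3.156e7


def evaporation_time_years(mass_kg):
    """Textbook Hawking evaporation time, in years, for a black hole of
    the given mass. Assumes photon-only emission in ordinary 4D gravity."""
    seconds = 5120 * math.pi * G**2 * mass_kg**3 / (HBAR * C**4)
    return seconds / SECONDS_PER_YEAR


if __name__ == "__main__":
    # Example masses are illustrative assumptions, not values from the paper.
    examples = [
        ("one solar mass", SOLAR_MASS),
        ("1e11 kg (a mountain-sized mass)", 1e11),
        ("1 kg", 1.0),
    ]
    for label, mass in examples:
        print(f"{label}: about {evaporation_time_years(mass):.1e} years")
```

Because the lifetime scales as the cube of the mass, shrinking the black hole by a factor of ten shortens its life by a factor of a thousand, which is why only very small black holes can finish evaporating within the age of the Universe.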

Which brings us to our new paper. In this work, we show that the fact that tiny black holes haven't killed us all via vacuum decay can tell us something about the possibility that the Universe has more than three dimensions of space. It's a bit of an involved argument, but it's a fun one.

You may have heard people express concerns that the Large Hadron Collider might accidentally create a black hole that could swallow the Earth. (If you're worried about this, I recommend bookmarking the website www.hasthelargehadroncolliderdestroyedtheworldyet.com.) This can't happen for a lot of reasons, which I'll get into, so please don't panic. But scientists at the LHC actually have been hoping to create little black holes, because if they manage to make one (and watch it evaporate immediately), they’ll learn something about the structure of space itself. Those little black holes can only form in the LHC if there are more dimensions of space than we can perceive. And that would be a very exciting discovery. In fact, the lack of production of little black holes in the LHC can tell us that any extra dimensions that might exist have to be really really small.

Now, I just told you that little black holes can be incredibly, universe-destroying-ly dangerous, so why should we not be worried about this? The reason is that while the LHC is the most powerful particle collider ever created by humans, it's NOT the most powerful particle collider in the Universe. Cosmic rays -- high-energy particles that frequently get spit out by the matter swirling around supermassive black holes in other galaxies -- get to much higher energies than whatever the LHC can do, and they occasionally collide with each other out in space. Anything we can do, space can do better. So if the LHC could make little black holes capable of destroying the Universe via vacuum decay, the cosmos would have already done this, billions and billions of times over, all on its own.
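For a sense of scale, here is a small sketch comparing collision energies. The numbers are assumptions I'm plugging in for illustration, not values from the paper: a 13 TeV LHC proton-proton collision energy, and a cosmic ray proton at 10^20 eV, which is roughly where the most energetic observed cosmic rays sit. The function names are hypothetical. What matters for making new things in a collision is the center-of-mass energy, which is much larger when two cosmic rays hit head-on than when one hits a particle that is sitting still.

```python
import math

PROTON_REST_ENERGY_EV = 0.938e9   # proton rest energy, eV
LHC_COLLISION_ENERGY_EV = 13e12   # assumed LHC center-of-mass energy, eV
COSMIC_RAY_ENERGY_EV = 1e20       # assumed cosmic ray energy, eV


def head_on_energy_ev(energy_ev):
    """Center-of-mass energy for two identical particles with equal
    energies colliding head-on: the momenta cancel, so it is just 2E."""
    return 2.0 * energy_ev


def fixed_target_energy_ev(energy_ev, rest_energy_ev=PROTON_REST_ENERGY_EV):
    """Center-of-mass energy when a particle of total energy E hits an
    identical particle at rest: s = 2 m^2 + 2 E m (with c = 1)."""
    return math.sqrt(2.0 * rest_energy_ev**2 + 2.0 * energy_ev * rest_energy_ev)


if __name__ == "__main__":
    head_on = head_on_energy_ev(COSMIC_RAY_ENERGY_EV)
    fixed = fixed_target_energy_ev(COSMIC_RAY_ENERGY_EV)
    print(f"cosmic ray hitting a proton at rest: {fixed:.2e} eV")
    print(f"two cosmic rays head-on:             {head_on:.2e} eV")
    print(f"head-on energy / LHC energy:         {head_on / LHC_COLLISION_ENERGY_EV:.1e}")
```

Under these assumptions, a head-on collision between two such cosmic rays comes out millions of times more energetic than an LHC collision, even though such collisions are rare.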

But this is also an opportunity. Because just as we can place limits on how big extra dimensions are by not seeing black holes in the LHC, we can place even better limits on them by knowing that little black holes haven’t been created out in space somewhere, because those collisions are at much higher energies. Generally speaking, we wouldn't notice if a cosmic ray collision in a galaxy several million light years away made a tiny black hole. But if Burda, Gregory, and Moss are correct that black hole evaporation can cause vacuum decay, then the production of tiny black holes would have extremely obvious consequences. Or rather, if we notice that we're still here, it hasn't happened.

So in 2016, while Professor McNees and I were chatting at a meeting at Caltech, we had the idea that we could figure out what kinds of particle collisions should make black holes and therefore cause vacuum decay, and we could then use that to place really strong limits on how big extra dimensions can be. While we were working out the details of this, another paper including Gregory, Moss, and some of their colleagues, mentioned this possibility and put forward some additional calculations showing that it's a reasonable thing to do. So we pressed on, and did some quantitative calculations, and found that not only could we get good limits, we could get much better limits on extra dimensions than anything previously published.

Little Curled Up Dimensions

When we talk about the "size" of extra dimensions, what we mean is that if there are other dimensions of space (just, other, hard-to-imagine directions besides up/down, left/right, front/back), they have to be limited in extent, like they're curled up somehow. It's weird to imagine a dimension having a limit, but you can think of it like a sheet of paper, where two dimensions are pretty large but the third (the thickness) is much much smaller. If we lived on the sheet of paper, we could travel a long way up or down or across the page, but not very far into it. In a lot of theories of extra dimensions, the dimensions curl up around on themselves so if you go a little ways into them, you come back to where you started. Extra dimensions of space are a useful idea in physics because they might allow gravity to leak into the extra dimensions kind of like how ink can seep into a sheet of paper.

Some of the previous constraints on how big the extra dimensions can be come from things like measuring the force of gravity on very very small scales, around a millimeter, to see if any of the gravity might be leaking out into other dimensions. Then there are the LHC black-hole-production attempts, and some more complicated calculations involving how particles carrying gravity in higher-dimensional theories could affect neutron stars and supernovae. The limits on the size of the extra dimensions depend on how many extra dimensions you think we might have, but for two extra dimensions (the most commonly explored possibility), the limits are generally around a millimeter, from measurements of the strength of gravity. Our method pushes that down to a few hundred femtometers -- not much larger than an atomic nucleus. For other methods, the limits are usually expressed in terms of an energy scale connected with the higher-dimensional theory, and in those terms, our limits are five or more orders of magnitude better than limits previously published.
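To see how a size and an energy scale can be two ways of stating the same limit, here is a rough sketch based on the order-of-magnitude relation used in large-extra-dimension models, M_Pl² ~ M_*^(n+2) Rⁿ, where M_* is the fundamental scale, n is the number of extra dimensions, and R is their size. Treat it as an assumption-laden illustration and not the calculation from our paper: I'm using the reduced Planck mass, dropping convention-dependent factors like 2π, assuming all n dimensions have the same size, and the function name is made up for this example.

```python
REDUCED_PLANCK_MASS_GEV = 2.435e18  # reduced Planck mass, GeV
HBAR_C_GEV_M = 1.973e-16            # hbar * c in GeV * meters


def extra_dimension_size_m(n_extra, fundamental_scale_gev):
    """Rough size R (meters) of n equal-sized extra dimensions, from
    M_Pl^2 ~ M_*^(n+2) R^n with order-one factors dropped."""
    ratio = REDUCED_PLANCK_MASS_GEV / fundamental_scale_gev
    size_in_inverse_gev = ratio ** (2.0 / n_extra) / fundamental_scale_gev
    return size_in_inverse_gev * HBAR_C_GEV_M


if __name__ == "__main__":
    # Fundamental scales below are illustrative choices (1 TeV = 1e3 GeV).
    for n_extra in (2, 6):
        for scale_gev in (1e3, 1e4, 1e5):
            size = extra_dimension_size_m(n_extra, scale_gev)
            print(f"n = {n_extra}, M_* = {scale_gev:.0e} GeV -> R ~ {size:.1e} m")
```

With two extra dimensions and a fundamental scale of about 1 TeV, this gives a size of a fraction of a millimeter, which is why tabletop gravity measurements are sensitive to that case. Raising the lower limit on the energy scale is the same thing as pushing the allowed size down.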

The Fine Print

Of course, there are always caveats to any result in physics. In our case, our analysis depends on a few big assumptions: (1) that the vacuum is metastable (which is supported by measurements in the Standard Model of Particle Physics, but could be disproven if we get evidence of new kinds of physics that change the picture entirely), and (2) that black holes can indeed trigger vacuum decay. There are also some caveats around the energy scale at which the vacuum becomes metastable, and little assumptions about things like the way the extra dimensions are compactified (curled up) or how the cosmic rays happen. But we think this result is an exciting one, and it might present cool new opportunities to explore the stability and structure of our Universe. On the less fun side, this kind of work does suggest that there's really no way the LHC is ever going to make tiny black holes, which is a bummer, because those would be neat. But, if we do see black holes at the LHC, it'll be fantastic evidence that they can't destroy the Universe, which may be some consolation.

Theoretical physics is a journey, and sometimes it's a fun one that can take you to really wild places. I hope you've enjoyed taking this little diversion with me -- check out the paper if you want to get into the details, and hang out with me over on Twitter if you want to hear more about science, life, and weird new ways to destroy the Universe.

UPDATE: We Were Wrong About a Thing (but we fixed it)

Sometimes when talking about the self-correcting nature of science, scientists will say things like "Scientists love to be wrong! That's how we make progress!" This is only half true. It's fair to say that being wrong is how we make progress --- at least in the sense that disproving something is almost always more possible than proving something, and so every time someone is able to demonstrate that a theory doesn't work, it gives us a clue about where to go next. (For a more nuanced discussion of this, see this Cosmos article.) But I don't think any of us really love being wrong. At least, I sure don't, and I've never heard anyone proudly announce at morning coffee, "My equations didn't work at all! I have to start over now!" Mostly, when it happens, we get a bit sad, put on a brave face, and try to fix it.

So we do what we can to avoid being wrong. In our case, with this paper, we double-checked all our calculations, read a bunch of references, and sent our paper out to a few expert colleagues for comments before putting it on the arxiv. When the comments came back positive, we figured we were on pretty solid ground, so we went ahead and put the paper on the arxiv, with the hope that we would get MORE comments that might help us to see if there were any issues before we sent it to the journal for publication. The good thing about doing it this way is that instead of just the one or two referees you usually get in the peer review process, this opened our paper up to peer review by the entire physics community, which, we figured, gave us the best chance of ensuring our paper had the very best science.

And the process worked! We got an e-mail within a week or so from another physicist who had seen our paper on the arxiv and who happened to have thought about some of these issues before. And he reminded us of something that we had forgotten to take into account in our results. And he pointed out that as a result of the oversight, those amazing limits we got... did not quite hold up.

The issue is a slightly subtle one but the short version is that our analysis has to assume an instability scale --- an energy scale at which the Higgs becomes unstable --- and we can only really draw conclusions about extra dimensions for models with a fundamental scale above that energy. That means that instead of ruling out a huge range of extra dimension models, including the most commonly discussed ones, we're only ruling out a somewhat narrow range of models with scales that hover around the Higgs instability scale. Or, equivalently, a narrow range of extra dimension sizes. So our results plots had to be updated, and our main conclusions revised.

After we made the revisions, we posted the revised version on the arxiv (the site makes it very easy and transparent to do this --- you can always access the previous versions, so everyone knows what's changed). When, after a little over two weeks, no one had contacted us with any more comments, we decided it was time to submit the paper and we sent it to the journal Physical Review D. We settled in for what we expected to be a long wait and a series of back-and-forth revisions, but amazingly, after only a week in review, the journal contacted us to say the paper was accepted! The reviewer only had a short comment: "The paper reports an interesting analysis of black hole production in cosmic ray collisions and ensuing constraints on the fundamental scale. I am happy to recommend publication."

Despite the satisfaction of the speedy review and publication, I'll admit it was still a bit of a disappointment to learn that our limits were more, well, limited, than we had originally thought. At first glance, our results had really seemed like they could change the conversation around extra dimensions, with the only major caveat being that you had to take seriously the meta-stability of the vacuum (which, of course, many do). But as it is, the result is still a pretty cool one. This method can tell us something about energy scales that we otherwise seemed to have no way of accessing. What it tells us may not be what we would need to rule out the currently favored theories, but that doesn't mean it won't prove to be a fruitful new direction.

I'm still proud of our work, and I still think that what we did is interesting. We're in a totally different regime right now, and we don't know where things will lead. In physics, you can't always get what you want, but you never know --- it might turn out, someday, to be exactly what you need.